Low-Resource Language Modelling

Abstract

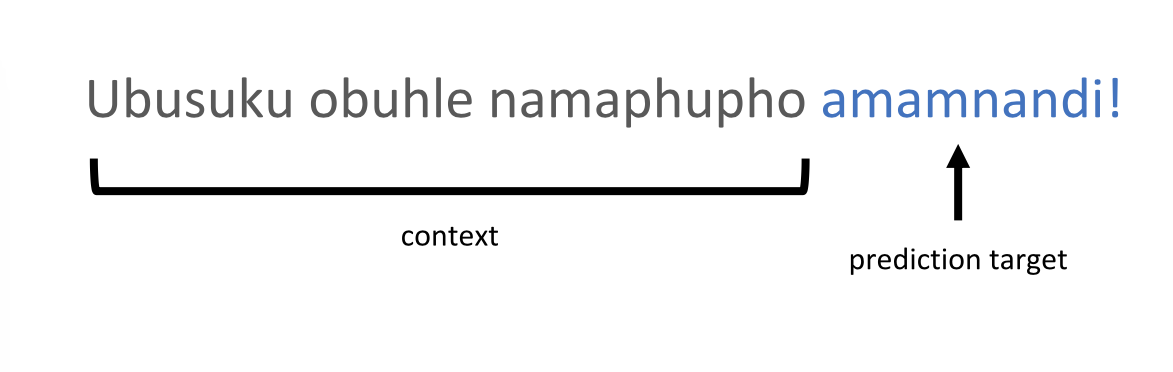

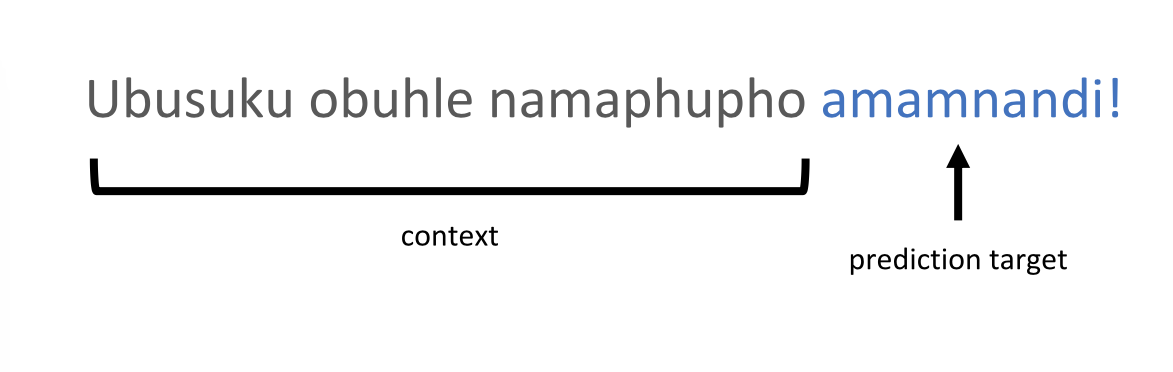

Given a sequence of context words, a language model predicts the next word in the sentence. More formally, a language model assigns a probability to a sequence of words. Modern language models are trained on large datasets. However, many of South Africa’s languages are low-resource: little text data is available for training language models in them. We evaluate different language models and training methods for modelling South African languages.
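To make the two definitions above concrete, here is a minimal sketch of a count-based bigram language model. It is an illustrative assumption, not one of the models evaluated in this project. The model applies the chain rule, P(w_1, ..., w_n) = P(w_1) P(w_2 | w_1) ... P(w_n | w_1, ..., w_{n-1}), but conditions each word only on the word before it. It also uses add-one (Laplace) smoothing, so word pairs never seen in training still receive non-zero probability. Such unseen pairs are common when training data is scarce. The function names and the toy English corpus are hypothetical.

```python
import math
from collections import Counter


def train_bigram(sentences):
    """Count contexts and bigrams, padding each sentence with <s> and </s> markers."""
    context_counts = Counter()
    bigram_counts = Counter()
    vocab = set()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        vocab.update(tokens)
        for prev, word in zip(tokens, tokens[1:]):
            context_counts[prev] += 1
            bigram_counts[(prev, word)] += 1
    return context_counts, bigram_counts, vocab


def next_word_prob(prev, word, context_counts, bigram_counts, vocab):
    """Add-one (Laplace) smoothed estimate of P(word | prev)."""
    return (bigram_counts[(prev, word)] + 1) / (context_counts[prev] + len(vocab))


def sentence_log_prob(sentence, context_counts, bigram_counts, vocab):
    """Chain rule under the bigram assumption: sum of log P(w_i | w_{i-1})."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    return sum(
        math.log(next_word_prob(prev, word, context_counts, bigram_counts, vocab))
        for prev, word in zip(tokens, tokens[1:])
    )


def predict_next(prev, context_counts, bigram_counts, vocab):
    """Return the most probable next word after `prev` (sorted for deterministic ties)."""
    return max(
        sorted(vocab),
        key=lambda w: next_word_prob(prev, w, context_counts, bigram_counts, vocab),
    )


if __name__ == "__main__":
    corpus = ["the cat sat", "the cat ran", "the dog sat"]  # hypothetical toy corpus
    model = train_bigram(corpus)
    print(predict_next("the", *model))  # cat
    print(math.exp(sentence_log_prob("the cat sat", *model)))
    print(math.exp(sentence_log_prob("the dog ran", *model)))
```

On this toy corpus, the seen sentence "the cat sat" scores higher than the unseen "the dog ran". The unseen sentence still gets a non-zero probability because of smoothing. Larger neural language models typically keep the same next-word factorisation, but they learn the conditional probabilities instead of counting them.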

Videos

Demo Video (YouTube)

Gallery

Language Model Graphic