The Dynamic Vehicle Routing Problem

Abstract

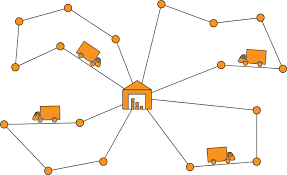

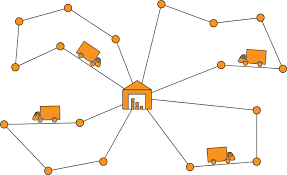

The Dynamic Vehicle Routing Problem (DVRP) extends the classical Vehicle Routing Problem by incorporating dynamic and uncertain elements, such as stochastic customer requests, which makes it more applicable to real-world logistics. Unlike static routing, where all information is known beforehand, DVRP requires algorithms that can adapt as new customers appear during execution whilst respecting vehicle capacity constraints.
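To make the dynamic setting concrete, the sketch below shows one simple way a newly arrived customer could be added to existing routes: cheapest insertion subject to vehicle capacity. It is an illustration only, not one of the methods benchmarked in this research. The function name `insert_request`, the data layout (routes as lists of node ids starting and ending at depot `0`), and the simplifying assumption that every position in a route is still open for insertion are all hypothetical.

```python
import math


def insert_request(routes, loads, coords, demands, customer, capacity):
    """Insert `customer` at the cheapest capacity-feasible position.

    Assumptions (illustrative, not from the source): each route is a list of
    node ids that starts and ends at the depot, `loads[r]` is the demand
    already assigned to route r, and no part of a route is frozen yet.
    Returns True if the customer was inserted, False if no vehicle has room.
    """
    best = None  # (extra_distance, route_index, position)
    for r, route in enumerate(routes):
        # Skip vehicles that cannot carry the additional demand.
        if loads[r] + demands[customer] > capacity:
            continue
        for pos in range(1, len(route)):
            a, b = route[pos - 1], route[pos]
            # Detour cost of visiting the customer between a and b.
            extra = (
                math.dist(coords[a], coords[customer])
                + math.dist(coords[customer], coords[b])
                - math.dist(coords[a], coords[b])
            )
            if best is None or extra < best[0]:
                best = (extra, r, pos)
    if best is None:
        return False
    _, r, pos = best
    routes[r].insert(pos, customer)
    loads[r] += demands[customer]
    return True
```

A request that returns `False` here would have to be rejected or served by an additional vehicle; how such cases are handled is a modelling choice outside this sketch.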

This research benchmarks heuristic, metaheuristic, and reinforcement learning approaches to the Capacitated Dynamic Vehicle Routing Problem (CDVRP), in which customer requests arrive stochastically according to a Poisson process. The methods evaluated are the Clarke-Wright savings heuristic; the metaheuristics Ant Colony Optimisation (ACO), Tabu Search, and Adaptive Large Neighbourhood Search (ALNS); and the reinforcement learning algorithms Proximal Policy Optimisation (PPO), Advantage Actor-Critic (A2C), and Soft Actor-Critic (SAC). Using Solomon's benchmark instances, the algorithms are compared on solution quality, computational time, feasibility, scalability, and success rate across varying problem sizes and parameters.
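As a rough illustration of Poisson arrivals, request times can be generated by summing exponentially distributed inter-arrival gaps. The sketch below assumes a homogeneous process with a constant rate; the function name `sample_arrival_times` and the parameters `rate`, `horizon`, and `seed` are hypothetical and do not reflect the settings used in this research.

```python
import random


def sample_arrival_times(rate, horizon, seed=None):
    """Sample request arrival times of a homogeneous Poisson process.

    `rate` is the expected number of arrivals per unit time and `horizon`
    is the length of the planning period (both illustrative assumptions).
    Returns a sorted list of arrival times in [0, horizon).
    """
    rng = random.Random(seed)
    times = []
    # Inter-arrival gaps of a Poisson process are exponentially distributed.
    t = rng.expovariate(rate)
    while t < horizon:
        times.append(t)
        t += rng.expovariate(rate)
    return times
```

Each sampled time would then be paired with a customer location and demand, for example by revealing customers of a Solomon instance in arrival order, which is again an assumption rather than a description of the experimental setup.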

The findings reveal that whilst reinforcement learning methods offer computational advantages, architectural limitations in neural network design and action space complexity prevent them from matching the solution quality of traditional metaheuristic approaches. This research provides practical insights into algorithm selection for dynamic logistics operations, highlighting important trade-offs between solution optimality and computational efficiency across different operational scenarios.

