Abstract

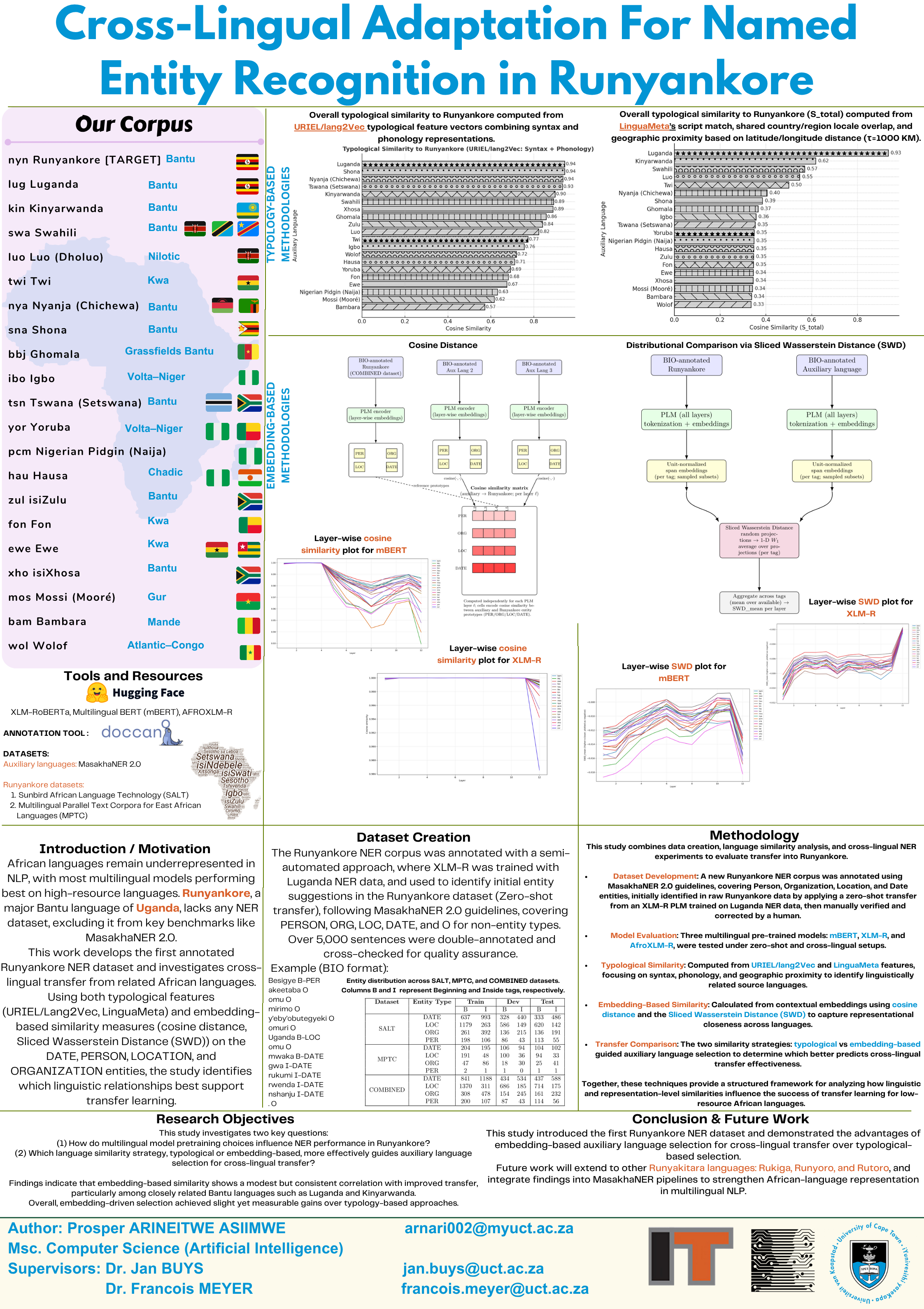

Runyankore, a Bantu language spoken by millions in Uganda, remains critically underrepresented in Natural Language Processing (NLP). Prior to this study, no publicly accessible dataset existed for Named Entity Recognition (NER) in Runyankore, despite the centrality of NER as a foundational NLP task. This work makes three principal contributions toward addressing this gap. First, we present the first manually annotated Runyankore NER dataset, constructed from publicly available sources and developed in accordance with the MasakhaNER 2.0 guidelines, thereby extending ongoing initiatives to build high-quality NLP resources for African languages. Second, we establish baseline performance benchmarks for Runyankore NER using widely adopted multilingual pretrained language models (PLMs), including XLM-RoBERTa, AfroXLM-R, and multilingual BERT (mBERT), evaluated under both zero-shot and cross-lingual transfer settings. Third, we conduct a systematic investigation of auxiliary language selection strategies for cross-lingual transfer. Beyond typological heuristics, we compute sentence and span-level embedding similarities between Runyankore and 20 other African languages from the MasakhaNER 2.0 corpus, leveraging these similarity measures to inform model training configurations. Comparative experiments demonstrate that embedding-based similarity can outperform or complement random and typology-based baselines, yielding measurable improvements in Runyankore NER performance.

Collectively, these contributions deliver the first benchmarked NER model for Runyankore and introduce a reproducible framework for evaluating auxiliary language selection in multilingual transfer learning.

Videos 1

Watch presentations, demos, and related content

Like, comment, and subscribe on YouTube to support the creator!

Gallery 2

Explore the visual story of this exhibit

Title Image

IT-SHOWCASE POSTER.png